SSH as a service

This post starts a series devoted to a newly created SSH cloud service.

Motivation for SSH service

SSH was and remains a handy tool for network administrators. In a cloud deployment we need a way to SSH from outside the cloud into a VM that is not connected to the internet. Such a task usually requires a VPN.

Among the disadvantages of a VPN I can mention:

- A VPN does not support internal, non-routable networks

- A VPN is less secure, since it allows access to all devices on all routable networks in the cloud

- It requires a special client

- It is error-prone: the client might set the VPN server as its default gateway, which directs all of its outgoing traffic to the cloud

The tenant administrator wants to set up a service that handles network connectivity, hides address details from outside users and, at the same time, saves cloud floating IP addresses from being attached and detached on every immediate request of the current tenant users.

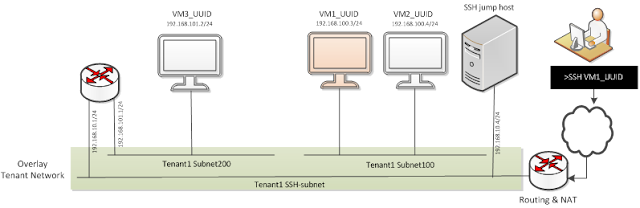

User wants to access virtual machine VM3 on cloud Tenant 1. VM3 is connected to routable private subnet 200:

|

| figure 1 |

SSH service concepts

First we need to register our target VM3 with the deployed SSH service. The SSH service deploys one or more service VMs (the SSH jump host in figure 2) in the tenant availability zone. The main purpose of a service VM is to steer the SSH connection securely to the user's target VM. The SSH service VM gets connected to the tenant router if it is not connected yet, and the registration phase finishes by generating an SSH configuration file for further access to VM3.

Figure 2

Finally the user can access VM3 smoothly. Routing is performed implicitly behind the scenes by the SSH proxy agent on the service VM and by a properly configured cloud network service.

Figure 3

Access command: ssh -F ssh_config VM3_UUID

The user is not required to know the IP address of the accessed VM. The service is responsible for generating an SSH configuration file that addresses the VM by its UUID.


SSH service dependencies

The SSH service builds on the cloud services provided by keystone, neutron and nova. Internally, the SSH service accesses these base services via their REST APIs and the corresponding service clients.

SSH keys and SSH proxy agent

SSH can steer a session through a configured SSH proxy agent. All details are hidden in the SSH configuration file. A high level of security is built into the service because asymmetric keys are the sole authentication mechanism.

Host target_VM_UUID

    IdentityFile target_key.pem

    HostName 54.65.8.21

    User target_user

    ProxyCommand ssh -i gate_key.pem -W %h:%p gate_user@gate_VM
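Since the service is expected to generate this file, here is a minimal sketch of how such a stanza could be rendered from its parameters. The function name `render_target_stanza` and its argument order are assumptions made for illustration, not part of the service. Note that `-W` receives only `%h:%p` (expanded by ssh to the target's HostName and Port), while the gate login is attached to the gate host.

```shell
#!/bin/sh
# Hypothetical helper (not part of the service API): prints one ssh_config
# stanza for a target VM that is reached through an SSH gate.
# Arguments: target alias (the VM UUID), target address, target user,
#            target key file, gate host, gate user, gate key file.
render_target_stanza() {
    alias_name=$1 target_addr=$2 target_user=$3 target_key=$4
    gate_host=$5 gate_user=$6 gate_key=$7
    # %h and %p are left literal here; ssh expands them at connection time
    # to the HostName and Port of the target.
    cat <<EOF
Host ${alias_name}
    HostName ${target_addr}
    User ${target_user}
    IdentityFile ${target_key}
    ProxyCommand ssh -i ${gate_key} -W %h:%p ${gate_user}@${gate_host}
EOF
}
```

Redirecting the output of `render_target_stanza target_VM_UUID 54.65.8.21 target_user target_key.pem gate_VM gate_user gate_key.pem` into a file named ssh_config would give a file usable with `ssh -F ssh_config target_VM_UUID`.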

The proxy command acts as the steering wheel of the SSH session. Before reaching the target VM the user must authenticate at the SSH gate, with either the same or a different private key. The proxy command uses its private key to access the gate; the matching public key must be installed on the gate before any authentication attempt takes place.

The service API provides a CRUD command set to manage gate keys. Since password authentication is disabled at the SSH gate, inserting a new public key into the gate or removing one from it is done by authenticating with another, internal SSH key pair that serves as the service administrator's management entry point into the gate.
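The insert and remove operations on gate keys come down to editing an authorized_keys file. The sketch below shows one way this could be done idempotently; the function names are hypothetical, the public key file is assumed to hold a single line, and how the service actually runs such a step on the gate over the management key pair is not shown.

```shell
#!/bin/sh
# Hypothetical helpers: add or remove one public key line in an
# authorized_keys file. The file path is passed in explicitly.

# add_gate_key <authorized_keys_file> <public_key_file>
add_gate_key() {
    auth_file=$1
    key_line=$(cat "$2") || return 1
    touch "$auth_file" && chmod 600 "$auth_file" || return 1
    # -x: whole-line match, -F: fixed string, so the key is added only once.
    if ! grep -qxF -- "$key_line" "$auth_file"; then
        printf '%s\n' "$key_line" >> "$auth_file"
    fi
}

# remove_gate_key <authorized_keys_file> <public_key_file>
remove_gate_key() {
    auth_file=$1
    key_line=$(cat "$2") || return 1
    tmp_file=$(mktemp) || return 1
    # grep -v exits with 1 when no line is left to print; only a status
    # above 1 is treated as a real error.
    grep -vxF -- "$key_line" "$auth_file" > "$tmp_file" || [ $? -eq 1 ] || {
        rm -f "$tmp_file"
        return 1
    }
    cat "$tmp_file" > "$auth_file"   # rewrite in place, keeping mode and owner
    rm -f "$tmp_file"
}
```

Calling `add_gate_key` twice with the same key leaves a single entry, so a retried request does not duplicate keys on the gate.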

SSH service API

One of the most desirable service features is addressing the target VM by UUID instead of by IP address. This valuable feature comes as a free gift from the neutron port DNS service plugin.

- In the reference implementation the SSH jump host is referred to as the SSH gate.

- The project_name parameter is added implicitly to every API call

# create gate

openstack gate create <gate_name>

Other optional parameters of this fundamental call are:

--image=<image_name>

--flavor=<service_vm_flavor>

--net_id=<net_id>   # private subnet to deploy this service VM on

--project-name=<project_name>

# create SSH key pair if required

ssh-keygen -q -N "" -f gate_key

# add authentication key to gate

openstack gate add key <gate_name> <public_key_file> <key_name>

# create a target profile that includes the private key name, user name and target VM name

openstack target create profile --target=<target_name> --user=<user_name> --private-key=<key-file> <profile_name>

# add profile to gate

openstack gate add target <gate_name> <profile_name>

# generate the SSH configuration file for the given target profile and print it to output

openstack target ssh config --gate=<gate_name> --profile-name=<profile_name>

The API should provide a complete set of CRUD operations to manage gate keys, target profiles and gates.
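The commands above can be chained into one provisioning step. The following is a sketch only: the function name `provision_target`, the key name `gate_key` registered on the gate and the output file name ssh_config are assumptions, and the `gate` and `target` subcommands are the desired command set of this post rather than part of a stock OpenStack client.

```shell
#!/bin/sh
# Sketch only: chains the desired gate/target commands for one target VM.
# provision_target <gate_name> <target_name> <user_name> <target_key_file> <profile_name>
provision_target() {
    gate_name=$1 target_name=$2 user_name=$3 target_key=$4 profile_name=$5

    # Create the gate key pair only if it does not exist yet.
    [ -f gate_key ] || ssh-keygen -q -N "" -f gate_key || return 1

    openstack gate create "$gate_name" || return 1
    # "gate_key" as the key name is an arbitrary choice for this sketch.
    openstack gate add key "$gate_name" gate_key.pub gate_key || return 1
    openstack target create profile \
        --target="$target_name" \
        --user="$user_name" \
        --private-key="$target_key" \
        "$profile_name" || return 1
    openstack gate add target "$gate_name" "$profile_name" || return 1
    # The generated configuration is printed to output, so capture it in a file.
    openstack target ssh config \
        --gate="$gate_name" \
        --profile-name="$profile_name" > ssh_config
}
```

After a successful run the target would be reachable with the access command shown earlier, `ssh -F ssh_config VM3_UUID`.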

SSH deployment

The new SSH service follows the standard OpenStack plugin deployment scheme.

- enable_plugin knob https://github.com/igor-toga/knob2.git

The line above, placed in local.conf, installs the service code and its dependencies, registers endpoints, updates the service database and starts the service daemon.
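As a small convenience, the plugin line can be added to a DevStack local.conf in a repeatable way. The helper name `ensure_knob_plugin` is hypothetical, and the sketch assumes that the `[[local|localrc]]` section is the last section of the file, since the line is simply appended.

```shell
#!/bin/sh
# Hypothetical helper: make sure a DevStack local.conf enables the knob plugin.
PLUGIN_LINE='enable_plugin knob https://github.com/igor-toga/knob2.git'

# ensure_knob_plugin [conf_file]   (defaults to ./local.conf)
ensure_knob_plugin() {
    conf_file=${1:-local.conf}
    # Start a minimal file with the localrc section if none exists yet.
    [ -f "$conf_file" ] || printf '%s\n' '[[local|localrc]]' > "$conf_file" || return 1
    # Append the plugin line only once (assumes localrc is the last section).
    grep -qxF -- "$PLUGIN_LINE" "$conf_file" || printf '%s\n' "$PLUGIN_LINE" >> "$conf_file"
}
```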

SSH gate image hardening and gate keys management

The next phase of service development could be SSH gate image hardening and a different key management scheme. The drawback and security weakness of the current POC implementation is a modifiable gate image. As a matter of fact, only the SSH keys get modified on the gate image. The gate image could mount a small NFS partition at boot that holds the public SSH keys. Such an enhancement requires additional service configuration effort to couple the SSH service with the OpenStack Glance service.


NOTE: the reference implementation has an API set that differs slightly from the desired command set listed here.
