Distributed SNAT - examining alternatives

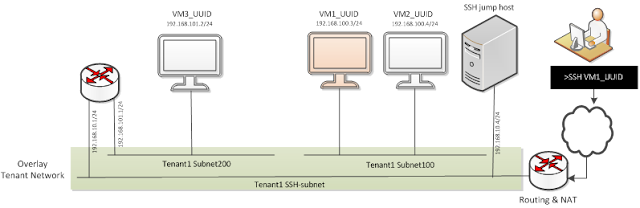

SNAT remains one of the most common network features in cloud deployments that still has no agreed distributed solution. While the Distributed Virtual Router (DVR) solves local connectivity for floating IPs and simplifies east-west VM communication, SNAT is still deployed at the network node and remains a traffic bottleneck.

The figure above shows two deployed tenants, 'green' and 'orange'. SNAT is performed centrally at the network node.

Figure 1

Possible solutions

There are a number of proposed solutions to decentralize SNAT. Each has its own benefits and drawbacks.

1. SNAT per (tenant, router) pair

The most straightforward solution is to perform SNAT at the router instance on each compute node. However, while a DVR deployment copies the router's internal subnet address across compute nodes, the router's IP address on the external network cannot be copied. Such a deployment consumes an extra external address for each (router, tenant) pair on every compute node that hosts it.

Figure 2

Maximum external address consumption equals:

[# of compute nodes] * [# of tenants]

A cloud deployment can reduce external address consumption by allocating addresses lazily: an external address is allocated and assigned only when the first VM of a given tenant is 'born' on a compute node and connected to a routable internal network. In the figure above, external address 172.24.4.2 was allocated only when VM2 of the 'orange' tenant was born.

For cloud deployments with a large supply of external addresses, or deployments that sit behind another NAT beyond the cloud edge, this NAT model may be worthwhile. The Neutron database should track all of the additional gateway router ports, and external addresses should be provisioned implicitly from a separate external address pool.
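The lazy allocation described above can be sketched as follows. This is a minimal illustration, not the proof-of-concept code: the class name `LazySnatAllocator`, the hook `on_vm_plugged`, the pool CIDR `172.24.4.0/24`, and the choice to reserve the first host address are all assumptions, and one router per tenant is assumed so that the bound matches the formula above.

```python
import ipaddress


class LazySnatAllocator:
    """Hypothetical allocator: one external address per (router, compute node)."""

    def __init__(self, pool_cidr, reserved=1):
        network = ipaddress.ip_network(pool_cidr)
        # Assumption: skip the first `reserved` host addresses (e.g. a gateway).
        self._free = list(network.hosts())[reserved:]
        self._bindings = {}  # (router_id, compute_node) -> external address

    def on_vm_plugged(self, router_id, compute_node):
        """Called when a VM is connected to a routable internal network."""
        key = (router_id, compute_node)
        if key not in self._bindings:
            if not self._free:
                raise RuntimeError("external address pool exhausted")
            # First VM of this router on this node: allocate only now.
            self._bindings[key] = self._free.pop(0)
        return self._bindings[key]

    def consumed(self):
        """Number of external addresses currently in use."""
        return len(self._bindings)


def max_consumption(num_compute_nodes, num_tenants):
    """Worst case: every tenant has a VM on every compute node."""
    return num_compute_nodes * num_tenants


allocator = LazySnatAllocator("172.24.4.0/24")
first = allocator.on_vm_plugged("green-router", "compute-1")
again = allocator.on_vm_plugged("green-router", "compute-1")  # same pair, reused
other = allocator.on_vm_plugged("orange-router", "compute-1")
print(first, again, other, allocator.consumed(), max_consumption(2, 2))
```

With two tenants and two compute nodes the worst case is four addresses, but only two are consumed here because neither tenant has a VM on the second node yet.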

A proof-of-concept implementation of this SNAT model, based on the neutron stable/mitaka branch, is here and is being discussed in this post. The implementation makes a number of simplifying assumptions that will need to be revisited in a future round of enhancements.

Among them:

- Explicit external address allocation per (router, compute node) pair, which requires a client API modification.

2. SNAT per compute node

This SNAT model limits external address consumption to a single address per compute node, greatly reducing the use of network resources. However, it has at least one noticeable drawback. When an external service sees multiple tenants behind a single external IP address, abuse of the service by a VM of one tenant may cause denial of service to a VM of another tenant that resides on the same compute host.
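The drawback can be illustrated with a toy external service that throttles by source IP. This is only a sketch under assumptions: the `PerSourceRateLimiter` class, its limit of 100 requests, and the request counts are hypothetical and stand in for whatever abuse protection a real service applies.

```python
from collections import Counter


class PerSourceRateLimiter:
    """Toy external service that rejects a source IP after `limit` requests."""

    def __init__(self, limit):
        self.limit = limit
        self.seen = Counter()

    def request(self, source_ip):
        """Return True if the request is served, False if it is rejected."""
        self.seen[source_ip] += 1
        return self.seen[source_ip] <= self.limit


service = PerSourceRateLimiter(limit=100)
shared_external_ip = "172.24.4.2"  # the single SNAT address of one compute node

# A VM of the 'orange' tenant floods the service through the shared address.
for _ in range(1000):
    service.request(shared_external_ip)

# A well-behaved 'green' VM on the same host uses the same source address,
# so the service cannot tell it apart and rejects it as well.
green_allowed = service.request(shared_external_ip)
print(green_allowed)
```

Because the service only sees the shared external address, it has no way to tell the two tenants apart.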

Figure 3

The figure above shows a SNAT rule shared by both tenants, translating the addresses of VMs from multiple tenants into a single external address. Reverse translation must restore the tenant-specific information: IP address, MAC address, and other tenant-related data. To visualize this complex translation, figure 3 shows two routing entities. In reality the translation could be performed by different routing tables within the same routing entity.
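A minimal sketch of such a translation table follows. It assumes that connections are told apart by the external source port, which is one common NAT technique and not necessarily how any particular implementation does it; the names `Flow` and `ComputeNodeSnat`, the MAC addresses, and the starting port 20000 are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """Tenant-side identity of a connection, needed for reverse translation."""
    tenant: str
    src_mac: str
    src_ip: str
    src_port: int


class ComputeNodeSnat:
    """Hypothetical per-node SNAT table sharing one external address."""

    def __init__(self, external_ip, first_port=20000):
        self.external_ip = external_ip
        self._next_port = first_port
        self._forward = {}  # Flow -> external port
        self._reverse = {}  # external port -> Flow

    def translate(self, flow):
        """Outbound: map a tenant flow to (external_ip, external_port)."""
        if flow not in self._forward:
            port = self._next_port
            self._next_port += 1
            self._forward[flow] = port
            self._reverse[port] = flow
        return self.external_ip, self._forward[flow]

    def reverse(self, external_port):
        """Inbound: restore tenant, MAC and IP from the external port."""
        return self._reverse[external_port]


snat = ComputeNodeSnat("172.24.4.2")
# Tenants may use overlapping internal addresses, so the tenant is part of the key.
green = Flow("green", "fa:16:3e:00:00:01", "10.0.0.5", 40000)
orange = Flow("orange", "fa:16:3e:00:00:02", "10.0.0.5", 40000)
_, green_port = snat.translate(green)
_, orange_port = snat.translate(orange)
print(snat.reverse(green_port).tenant, snat.reverse(orange_port).tenant)
```

Keeping the tenant in the lookup key is what allows two tenants with identical internal addresses to share the one external address without their return traffic being mixed up.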

DragonFlow implementation

This model was proposed and implemented quite elegantly in the DragonFlow service plugin for Neutron.

DragonFlow deploys a Ryu SDN controller on every compute node. When the Nova scheduler asks the Neutron server to allocate a network port for a VM, the following happens:

- The Neutron server passes the port allocation request to the DragonFlow ML2 plugin. The ML2 plugin pushes the new Neutron port information into a separate DragonFlow database, in parallel with the regular Neutron DB update.

- The ML2 plugin informs the compute node where the VM will be 'born' about the port update.

- The L3 agent on the target compute node fetches the port information from the database and builds a Ryu-style update event containing it.

- The Ryu SDN controller pushes the update event to all registered SDN applications.

- Every application registered for this specific event [local neutron port created] may insert or update OVS flows.

A new SDN application that installs and uninstalls the SNAT-related OVS flows was the only new component needed to enable the distributed SNAT model.
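The event flow in the last two steps can be sketched as a simple publish/subscribe loop. This is plain Python and deliberately does not use the Ryu or DragonFlow APIs: the `Controller` class, the event name, the application functions, and the dictionary-based flow entries are all hypothetical stand-ins.

```python
from collections import defaultdict

PORT_CREATED = "local_neutron_port_created"  # hypothetical event name


class Controller:
    """Stand-in for the per-node controller that dispatches events to apps."""

    def __init__(self):
        self._handlers = defaultdict(list)
        self.flows = []  # stand-in for the OVS flow table

    def register(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, port):
        # Push the event to every application registered for it.
        for handler in self._handlers[event_type]:
            handler(self, port)


def l2_app(controller, port):
    """Existing application: forward traffic for the port's MAC address."""
    controller.flows.append(
        {"match": {"eth_dst": port["mac"]}, "action": {"output": port["id"]}}
    )


def snat_app(controller, port):
    """New application: rewrite the source address of outbound traffic."""
    controller.flows.append(
        {"match": {"in_port": port["id"], "ip_src": port["ip"]},
         "action": {"set_ip_src": port["external_ip"]}}
    )


controller = Controller()
controller.register(PORT_CREATED, l2_app)
controller.register(PORT_CREATED, snat_app)
controller.publish(PORT_CREATED, {
    "id": "port-1", "mac": "fa:16:3e:00:00:01",
    "ip": "10.0.0.5", "external_ip": "172.24.4.2",
})
print(len(controller.flows))
```

The point of the design is that adding SNAT only requires registering one more handler; the existing applications and the dispatch loop are untouched.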
